This Item Ships For Free!

Tensorflow serving gpu store

Tensorflow serving gpu store, Pushing the limits of GPU performance with XLA The TensorFlow Blog store

4.51

Best Use

All Around

Max Cushion

Surface

Roads & Pavement

Stability

Neutral

Stable

Cushioning

Barefoot

Minimal

Low

Medium

High

Maximal

Product Details:

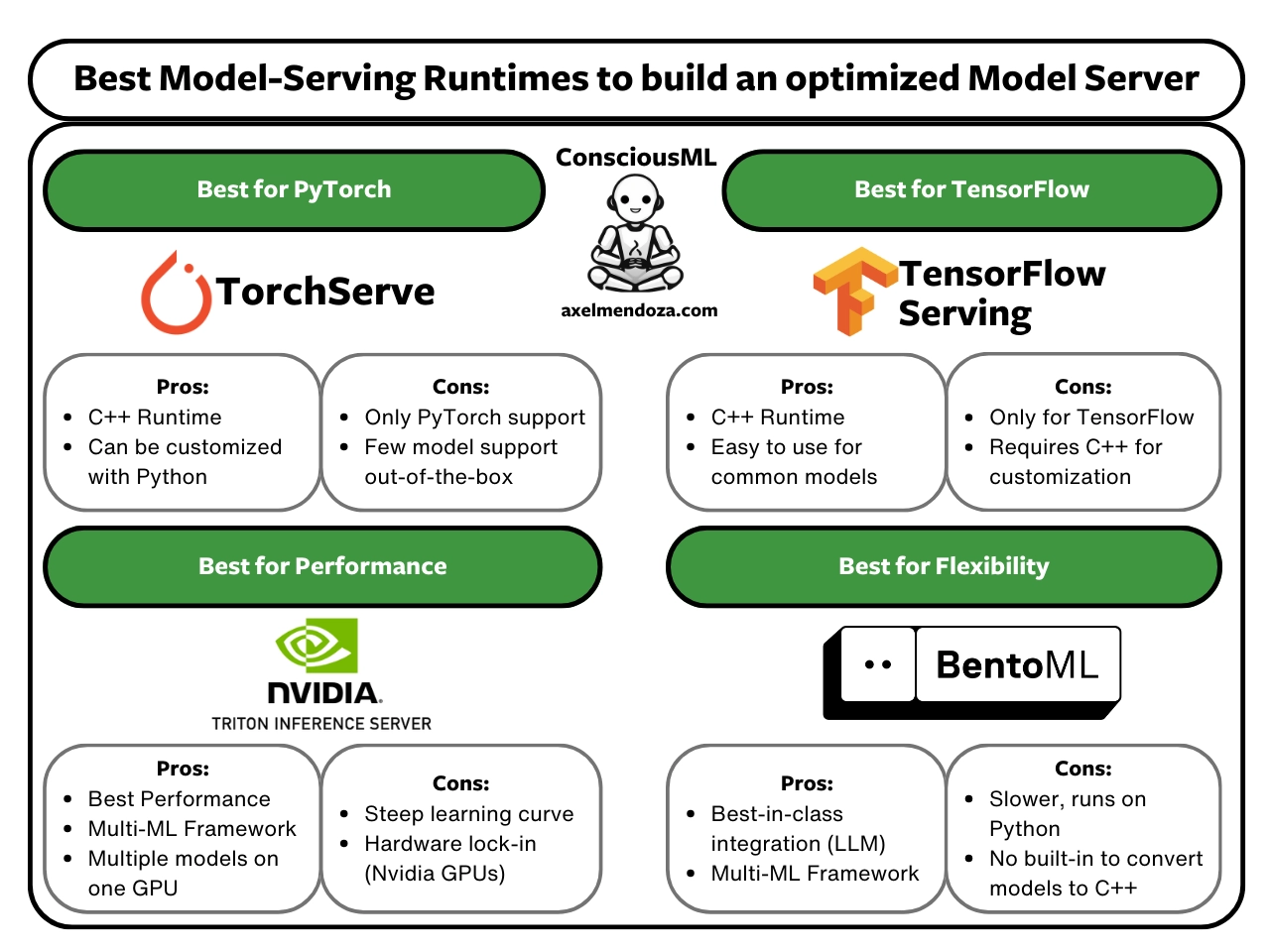

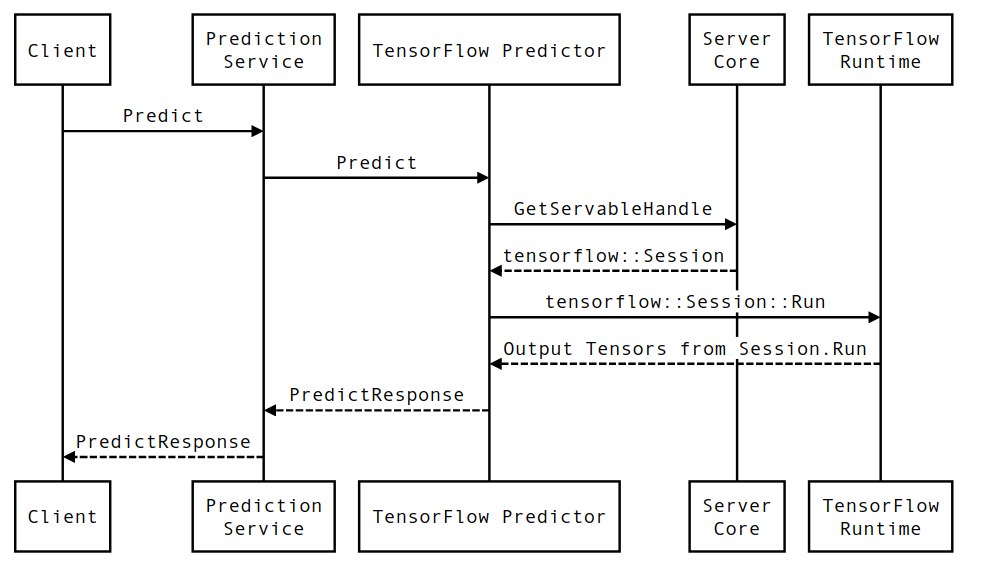

Product code: Tensorflow serving gpu store, TensorFlow Serving The Basics and a Quick Tutorial store, Serving an Image Classification Model with Tensorflow Serving by Erdem Emekligil Level Up Coding store, Load testing TensorFlow Serving's REST Interface The TensorFlow Blog store, Deploying a TensorFlow Model with TensorFlow Serving and Docker A Step by Step Guide using Universal Sentence Encoder USE by Mohit Kumar Medium store, GitHub tensorflow serving A flexible high performance serving system for machine learning models store, TF Serving Auto Wrap your TF or Keras model Deploy it with a production grade GRPC Interface by Alex Punnen Better ML Medium store, GPU utilization with TF serving Issue 1440 tensorflow serving GitHub store, What's coming in TensorFlow 2.0 The TensorFlow Blog store, TensorFlow 2.0 is now available The TensorFlow Blog store, Tensorflow Serving by creating and using Docker images by Prathamesh Sarang Becoming Human Artificial Intelligence Magazine store, Leveraging TensorFlow TensorRT integration for Low latency Inference The TensorFlow Blog store, Image Classification on Tensorflow Serving with gRPC or REST Call for Inference by Sushrut Ashtikar Towards Data Science store, Why TF Serving GPU using GPU Memory very much Issue 1929 tensorflow serving GitHub store, Introduction to TF Serving store, Tensorflow Serving with Docker. How to deploy ML models to production. by Vijay Gupta Towards Data Science store, Deploy models with TensorFlow Serving UnfoldAI store, Optimizing TensorFlow Serving performance with NVIDIA TensorRT by TensorFlow TensorFlow Medium store, TensorFlow Serving API gRPC store, How Zendesk Serves TensorFlow Models in Production by Wai Chee Yau Zendesk Engineering store, Tensorflow serving shop c store, Optimizing and Serving Models with NVIDIA TensorRT and NVIDIA Triton NVIDIA Technical Blog store, Pushing the limits of GPU performance with XLA The TensorFlow Blog store, Google Launches TensorFlow Serving DATAVERSITY store, What is a Model Serving Runtime store, Performance Guide TFX TensorFlow store, tensorflow serving gpu only one thread is busy Issue 1505 tensorflow serving GitHub store, Install Jupyter Notebook and TensorFlow On Ubuntu 18.04 Server store, Performing batch inference with TensorFlow Serving in Amazon SageMaker AWS Machine Learning Blog store, How to serve a model with TensorFlow Intel Tiber AI Studio store, Performance simple tensorflow serving documentation store, Docker outlet tensorflow gpu store, HetSev Exploiting Heterogeneity Aware Autoscaling and Resource Efficient Scheduling for Cost Effective Machine Learning Model Serving store, Model Deployment on Production Tensorflow Serving Tutorial store, Running TensorFlow inference workloads at scale with TensorRT 5 and NVIDIA T4 GPUs Google Cloud Blog store, Lecture 11 Deployment Monitoring The Full Stack store.

- Increased inherent stability

- Smooth transitions

- All day comfort

Model Number: SKU#7511281